Are you planning to become a Data Engineer by 2026? With the rise of AI, big data, and cloud computing, demand for skilled data engineers keeps growing worldwide. Whether you’re starting from scratch or transitioning from another tech role, this blog lays out a step-by-step, month-by-month roadmap to help you learn the essential tools, cloud platforms, and even AI components in 6 months.

Why Choose a Career in Data Engineering?

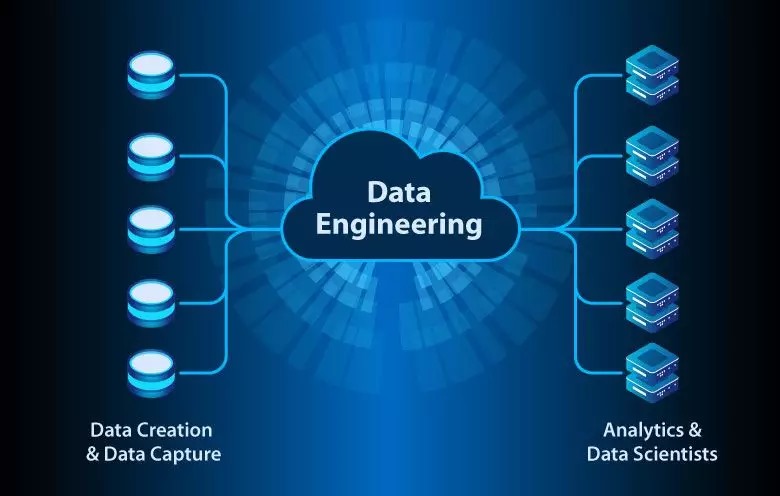

Data Engineering is the backbone of any data-driven organization. From building robust data pipelines to managing data lakes and integrating machine learning models, a Data Engineer ensures data is clean, structured, and accessible.

Typical Salary Ranges:

- India: ₹10–30 LPA

- US: $100K–$160K

Who Can Follow This Roadmap?

- Fresh graduates (Engineering, CS, Math)

- Working professionals transitioning into data roles

- Aspiring AI/ML Engineers who want to understand backend data flows

- Cloud engineers who want to upskill

6-Month Detailed Roadmap to Become a Data Engineer (Cloud + AI Ready)

Month 1: Core Python & SQL Mastery

Skills to Learn:

- Python Basics (Data Types, Loops, Functions, OOP)

- Data Structures (List, Dict, Set, Tuple)

- Error Handling & Logging

- SQL (Joins, Subqueries, Aggregations, Window Functions)
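The SQL topics above are easiest to grasp by running them. Here is a small sketch using Python's built-in `sqlite3` module with an in-memory database, so it needs no server. SQLite is only a stand-in for MySQL or PostgreSQL, the `customers` and `orders` tables are made up for illustration, and window functions need SQLite 3.25 or newer.

```python
import sqlite3

# In-memory database so the example needs no server (stand-in for MySQL/PostgreSQL).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Asha'), (2, 'Ben');
    INSERT INTO orders VALUES (1, 1, 120.0), (2, 1, 80.0), (3, 2, 200.0), (4, 2, 50.0);
""")

# JOIN + GROUP BY aggregation: one row per customer with total spend.
totals = conn.execute("""
    SELECT c.name, SUM(o.amount) AS total
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.name
    ORDER BY c.name
""").fetchall()

# Window function: rank each order by amount within its customer, keeping every row.
ranked = conn.execute("""
    SELECT c.name, o.amount,
           RANK() OVER (PARTITION BY o.customer_id ORDER BY o.amount DESC) AS rnk
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    ORDER BY c.name, rnk
""").fetchall()

print(totals)  # [('Asha', 200.0), ('Ben', 250.0)]
print(ranked)  # [('Asha', 120.0, 1), ('Asha', 80.0, 2), ('Ben', 200.0, 1), ('Ben', 50.0, 2)]
```

The key contrast: `GROUP BY` collapses each customer to one row, while `RANK() OVER (PARTITION BY ...)` keeps every order row and adds a per-customer ranking next to it.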

Tools to Practice:

- Jupyter Notebook / VS Code

- MySQL or PostgreSQL

Recommended Courses:

- “Python for Everybody” by Dr. Charles Severance (“Dr. Chuck”)

- “SQL for Data Science” – Coursera

Month 2: Data Manipulation & ETL Basics

Skills to Learn:

- Pandas & NumPy

- Data Cleaning Techniques

- Introduction to ETL (Extract, Transform, Load)

- APIs and Web Scraping (Optional)
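Data cleaning follows the same steps whatever library you use: normalize text, coerce types, handle missing values, and drop duplicates. The sketch below uses only the standard library so it runs anywhere; in Pandas the same steps map to methods such as `str.strip`, `pd.to_numeric(..., errors="coerce")`, `dropna`, and `drop_duplicates`. The sample CSV, its columns, and the choice to drop (rather than impute) bad rows are assumptions for illustration.

```python
import csv
import io

# Hypothetical raw export with typical problems: stray whitespace, inconsistent
# casing, a non-numeric value, a missing value, and a duplicate row.
RAW = """city,date,cases
 Delhi ,2021-05-01,100
delhi,2021-05-01,100
Mumbai,2021-05-01,n/a
Pune,2021-05-01,
Chennai,2021-05-02,40
"""

def to_int(value):
    """Coerce a string to int, returning None when it is blank, missing, or not numeric."""
    try:
        return int(value.strip())
    except (ValueError, AttributeError):
        return None

def clean(raw_csv):
    seen = set()
    rows = []
    for rec in csv.DictReader(io.StringIO(raw_csv)):
        city = rec["city"].strip().title()  # normalize whitespace and casing
        cases = to_int(rec["cases"])        # coerce type; bad values become None
        if cases is None:                   # drop rows with missing/invalid counts
            continue
        key = (city, rec["date"])
        if key in seen:                     # drop duplicates on the natural key
            continue
        seen.add(key)
        rows.append({"city": city, "date": rec["date"], "cases": cases})
    return rows

cleaned = clean(RAW)
print(cleaned)
# [{'city': 'Delhi', 'date': '2021-05-01', 'cases': 100}, {'city': 'Chennai', 'date': '2021-05-02', 'cases': 40}]
```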

Tools to Practice:

- Airflow (ETL scheduling)

- Pandas

- Beautiful Soup / Requests (for scraping)
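For the optional scraping topic, Beautiful Soup is the usual choice (for example, iterating over `soup.find_all("tr")`), but the underlying job is just walking HTML tags and collecting text. To keep this sketch dependency-free it swaps in the standard library's `html.parser.HTMLParser`; the table markup is a hypothetical sample, and in practice the HTML would come from something like `requests.get(url, timeout=10).text`.

```python
from html.parser import HTMLParser

# Hypothetical page snippet; a real scraper would download this HTML first.
PAGE = """
<table id="stats">
  <tr><th>State</th><th>Cases</th></tr>
  <tr><td>Kerala</td><td>1200</td></tr>
  <tr><td>Goa</td><td>85</td></tr>
</table>
"""

class TableParser(HTMLParser):
    """Collects the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._in_cell = True
            self._row.append("")

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._in_cell = False
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        # Only keep text that sits inside a cell; whitespace between tags is ignored.
        if self._in_cell:
            self._row[-1] += data.strip()

parser = TableParser()
parser.feed(PAGE)
parser.close()
header, *body = parser.rows
records = [dict(zip(header, row)) for row in body]
print(records)  # [{'State': 'Kerala', 'Cases': '1200'}, {'State': 'Goa', 'Cases': '85'}]
```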

Mini Project Idea:

- Build an ETL pipeline that extracts COVID-19 data from an API and stores it in a SQL database.
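A minimal sketch of this mini project's shape follows, which also exercises Month 1's error handling and logging. To stay runnable offline, the hypothetical `fetch_json` returns hard-coded, made-up records instead of calling a real COVID-19 API; the field names (`region`, `date`, `new_cases`) and the `daily_cases` table are assumptions, and SQLite stands in for MySQL or PostgreSQL. In a real pipeline, `fetch_json` would wrap an HTTP call such as `requests.get(url, timeout=10).json()` against whichever API you pick.

```python
import logging
import sqlite3

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("covid_etl")

def fetch_json():
    """Extract: hypothetical stand-in for an HTTP call to a COVID-19 API."""
    # Made-up sample records; one has a missing value to exercise error handling.
    return [
        {"region": "Region A", "date": "2021-05-01", "new_cases": "100"},
        {"region": "Region B", "date": "2021-05-01", "new_cases": None},
        {"region": "Region C", "date": "2021-05-01", "new_cases": "250"},
    ]

def transform(records):
    """Transform: cast types, logging and skipping records that fail validation."""
    rows = []
    for rec in records:
        try:
            rows.append((rec["region"], rec["date"], int(rec["new_cases"])))
        except (KeyError, TypeError, ValueError) as exc:
            log.warning("Skipping bad record %r: %s", rec, exc)
    return rows

def load(conn, rows):
    """Load: rows are keyed on (region, date), so re-runs overwrite instead of duplicating."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS daily_cases ("
        "region TEXT, date TEXT, new_cases INTEGER, PRIMARY KEY (region, date))"
    )
    with conn:  # commits on success, rolls back if an insert fails
        conn.executemany("INSERT OR REPLACE INTO daily_cases VALUES (?, ?, ?)", rows)
    log.info("Loaded %d rows", len(rows))

def run(conn):
    load(conn, transform(fetch_json()))

conn = sqlite3.connect(":memory:")
run(conn)
print(conn.execute("SELECT * FROM daily_cases ORDER BY region").fetchall())
# [('Region A', '2021-05-01', 100), ('Region C', '2021-05-01', 250)]
```

Making the load idempotent matters once Airflow schedules the job: a retried or re-run task should not double-count rows.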

Month 3: Cloud Foundations (AWS, Azure, GCP)

Skills to Learn:

- Basic Cloud Concepts (IAM, Object Storage, Compute, Networking)

- AWS: S3, Lambda, Glue, RDS

- GCP: BigQuery, Cloud Functions

- Azure: Data Factory, Blob Storage
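Lambda is often the glue between storage and processing: S3 can be configured to invoke a function whenever an object lands in a bucket. The sketch below shows a Python handler reading the bucket name and object key from the event's `Records` list. The bucket and key are hypothetical, the sample event keeps only the fields the handler reads, and `lambda_handler` is simply the console's default handler name, which is configurable.

```python
from urllib.parse import unquote_plus

def lambda_handler(event, context):
    """Handler invoked with an S3 event notification; returns the objects it saw."""
    objects = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys arrive URL-encoded (a space becomes '+'), so decode before use.
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append(f"s3://{bucket}/{key}")
        # A real pipeline might start a Glue job or load the file into RDS here.
    return {"received": objects}

# Trimmed-down, hypothetical event for a local run; real S3 events carry many more fields.
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "my-raw-bucket"},
                "object": {"key": "sales/2024/01/orders+jan.csv"}}}
    ]
}
print(lambda_handler(sample_event, None))
# {'received': ['s3://my-raw-bucket/sales/2024/01/orders jan.csv']}
```

Because the handler is a plain function, you can unit-test it locally with sample events like this before deploying it.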

Tools & Services:

- AWS Free Tier or GCP/Azure Trial Accounts

- Terraform (Optional – for Infrastructure as Code)

Suggested Learning Paths:

- AWS Cloud Practitioner + AWS Data Engineer Learning Path

- GCP Data Engineer Path – Google Cloud Skills Boost

Month 4: Big Data Ecosystem & Distributed Systems

Skills to Learn:

- Hadoop (Basic understanding)

- Spark (Core, SQL, Streaming)

- Kafka (Event streaming)

- Parquet, Avro, ORC file formats
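Hadoop MapReduce and Spark share the same basic idea: split data into partitions, process each partition independently, then shuffle intermediate results by key and combine them. The single-process sketch below walks through map, shuffle, and reduce for a word count; the input lines are hypothetical, and on a real cluster each step would run across many machines.

```python
from collections import defaultdict
from itertools import chain

# Hypothetical input split into "partitions", as HDFS blocks or Spark partitions would be.
partitions = [
    ["spark streams data", "kafka streams events"],
    ["spark reads parquet"],
]

def map_phase(lines):
    """Map: emit a (word, 1) pair for every word, independently per partition."""
    return [(word, 1) for line in lines for word in line.split()]

def shuffle(pairs):
    """Shuffle: group all values by key so each key's values end up together."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(groups):
    """Reduce: combine the grouped values for each key."""
    return {key: sum(values) for key, values in groups.items()}

mapped = [map_phase(p) for p in partitions]  # would run in parallel on a cluster
counts = reduce_phase(shuffle(chain.from_iterable(mapped)))
print(sorted(counts.items()))
# [('data', 1), ('events', 1), ('kafka', 1), ('parquet', 1), ('reads', 1), ('spark', 2), ('streams', 2)]
```

In PySpark the same job is roughly `rdd.flatMap(lambda line: line.split()).map(lambda w: (w, 1)).reduceByKey(lambda a, b: a + b)`, with Spark handling partitioning and the shuffle for you.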

Tools to Practice:

- PySpark

- Apache Kafka

- Hadoop HDFS

Mini Project Idea:

- Stream live Twitter (X) data, or another public real-time feed if API access is limited, into Kafka, process it with Spark Structured Streaming, and store the results in a database.
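The core of this project's Spark step is usually a windowed aggregation: group events into fixed time buckets and count them. The plain-Python sketch below shows what a one-minute tumbling window computes; the `(event_time, hashtag)` events are hypothetical. In Spark Structured Streaming you would express the same idea with something like `groupBy(window(col("event_time"), "1 minute"), col("hashtag")).count()`, plus `withWatermark` to bound how long late events are accepted.

```python
from collections import Counter
from datetime import datetime, timedelta

WINDOW = timedelta(minutes=1)
EPOCH = datetime(1970, 1, 1)

def window_start(ts):
    """Align a timestamp to the start of its fixed, non-overlapping (tumbling) window."""
    return EPOCH + ((ts - EPOCH) // WINDOW) * WINDOW

def count_by_window(events):
    """Count events per (window start, hashtag) pair."""
    counts = Counter()
    for event_time, hashtag in events:
        counts[(window_start(event_time), hashtag)] += 1
    return counts

# Hypothetical (event_time, hashtag) pairs, as they might be read from a Kafka topic.
events = [
    (datetime(2025, 1, 1, 9, 0, 5), "#spark"),
    (datetime(2025, 1, 1, 9, 0, 40), "#spark"),
    (datetime(2025, 1, 1, 9, 0, 59), "#kafka"),
    (datetime(2025, 1, 1, 9, 1, 10), "#spark"),
]

for (start, hashtag), n in sorted(count_by_window(events).items()):
    print(start.strftime("%H:%M"), hashtag, n)
# 09:00 #kafka 1
# 09:00 #spark 2
# 09:01 #spark 1
```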

Month 5: Data Warehousing & Pipeline Orchestration

Skills to Learn:

- Data Warehousing Concepts (OLAP, OLTP)

- Snowflake, Redshift, BigQuery

- Airflow for Workflow Management

- CI/CD for Data Pipelines
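The OLAP vs. OLTP distinction shows up in the queries themselves: OLTP systems read or update individual records, while warehouses such as Snowflake, Redshift, and BigQuery answer aggregate questions across many rows. A minimal sketch, using SQLite as a local stand-in and a small star schema (one fact table joined to dimension tables, a common warehouse layout); all table names and values are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Dimension tables describe the "what" and "when".
    CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, category TEXT);
    CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, month TEXT);
    -- The fact table holds measurable events keyed to the dimensions.
    CREATE TABLE fact_sales (product_id INTEGER, date_id INTEGER, revenue REAL);

    INSERT INTO dim_product VALUES (1, 'Books'), (2, 'Games');
    INSERT INTO dim_date VALUES (20240101, '2024-01'), (20240201, '2024-02');
    INSERT INTO fact_sales VALUES
        (1, 20240101, 30.0), (2, 20240101, 60.0),
        (1, 20240201, 45.0), (2, 20240201, 15.0);
""")

# OLAP-style question: revenue by category and month, aggregated over many fact rows.
report = conn.execute("""
    SELECT p.category, d.month, SUM(f.revenue) AS revenue
    FROM fact_sales AS f
    JOIN dim_product AS p USING (product_id)
    JOIN dim_date AS d USING (date_id)
    GROUP BY p.category, d.month
    ORDER BY p.category, d.month
""").fetchall()
print(report)
# [('Books', '2024-01', 30.0), ('Books', '2024-02', 45.0), ('Games', '2024-01', 60.0), ('Games', '2024-02', 15.0)]
```

An OLTP query against the same data would look more like fetching or updating a single sale by its key.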

Tools to Learn:

- dbt (Data Build Tool)

- Apache Airflow

- Git + GitHub Actions
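dbt ships generic tests such as `unique` and `not_null`, declared in YAML next to a model and run with `dbt test`; teams often run that command in a GitHub Actions workflow so a failing check blocks a merge. The sketch below is not dbt itself but a plain-Python stand-in showing what those two checks assert and how a non-zero exit code fails a CI step; the `orders` rows are hypothetical.

```python
from collections import Counter

def check_not_null(rows, column):
    """Like dbt's not_null test: fail if any row is missing a value in `column`."""
    bad = [r for r in rows if r.get(column) is None]
    return [f"not_null({column}): {len(bad)} failing row(s)"] if bad else []

def check_unique(rows, column):
    """Like dbt's unique test: fail if any value in `column` appears more than once."""
    counts = Counter(r.get(column) for r in rows)
    dupes = [value for value, n in counts.items() if n > 1]
    return [f"unique({column}): duplicates {dupes}"] if dupes else []

def run_checks(rows):
    """Run every check and collect failure messages (an empty list means all passed)."""
    return (
        check_not_null(rows, "order_id")
        + check_unique(rows, "order_id")
        + check_not_null(rows, "customer_id")
    )

# Hypothetical output of an `orders` model, validated before it is published.
ORDERS = [
    {"order_id": 1, "customer_id": 10},
    {"order_id": 2, "customer_id": 11},
    {"order_id": 3, "customer_id": 10},
]

failures = run_checks(ORDERS)
for message in failures:
    print("FAIL", message)
if failures:
    raise SystemExit(1)  # a non-zero exit code is what makes a CI job fail
print("All checks passed")
```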

Mini Project Idea:

- End-to-end pipeline: Ingest → Clean → Transform → Load → Visualize in a BI tool (e.g., Power BI or Tableau)
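Orchestrators such as Airflow treat a pipeline as a DAG: each task declares what it depends on, and the scheduler runs tasks in a valid order and retries failures. The sketch below imitates that with the standard library's `graphlib.TopologicalSorter` (Python 3.9+) over the five stages named in this mini project; the task bodies are hypothetical placeholders. In Airflow you would declare the same chain inside a DAG with `ingest >> clean >> transform >> load >> visualize`.

```python
from graphlib import TopologicalSorter

# Hypothetical placeholder tasks; each reads earlier results from a shared dict.
def ingest(ctx):
    return [" 10", "20 ", None, "30"]

def clean(ctx):
    return [s.strip() for s in ctx["ingest"] if s is not None]

def transform(ctx):
    return [int(s) for s in ctx["clean"]]

def load(ctx):
    return sum(ctx["transform"])  # stand-in for a warehouse write

def visualize(ctx):
    return f"total={ctx['load']}"  # stand-in for refreshing a BI dashboard

TASKS = {f.__name__: f for f in (ingest, clean, transform, load, visualize)}

# Map each task to the set of tasks it depends on.
DEPENDS_ON = {
    "clean": {"ingest"},
    "transform": {"clean"},
    "load": {"transform"},
    "visualize": {"load"},
}

def run_pipeline(tasks, depends_on, max_retries=2):
    """Run tasks in dependency order, retrying each failing task up to max_retries times."""
    ctx = {}
    for name in TopologicalSorter(depends_on).static_order():
        for attempt in range(max_retries + 1):
            try:
                ctx[name] = tasks[name](ctx)
                break
            except Exception:
                if attempt == max_retries:
                    raise  # out of retries: surface the failure, as a scheduler would
    return ctx

result = run_pipeline(TASKS, DEPENDS_ON)
print(result["visualize"])  # total=60
```

Because dependencies are data rather than call order, adding a branch (say, a second ingest source feeding `clean`) only changes `DEPENDS_ON`; the runner works out the new order.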

Month 6: AI/ML Integration + Real-Time Projects

Skills to Learn:

- ML Model Deployment (Optional but valuable)

- MLflow for experiment and model tracking

- Feature Engineering in Pipelines

- Real-Time Analytics
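Feature engineering in a pipeline means computing model inputs automatically as new data arrives, rather than by hand. A minimal sketch, assuming a plain list of daily closing prices (the `closes` values and feature names are hypothetical): a one-day return and a rolling mean, the same kind of values Pandas `pct_change()` or `rolling(3).mean()` would produce.

```python
from statistics import mean

def add_features(prices, window=3):
    """Return one feature row per day that has at least `window` prices up to and including it.

    Every feature for day i uses only prices[0..i], so no future data leaks
    into what the model sees at prediction time.
    """
    rows = []
    for i in range(max(window - 1, 1), len(prices)):
        rows.append({
            "day": i,
            "price": prices[i],
            # Fractional change versus the previous day (a lag-based feature).
            "return_1d": round((prices[i] - prices[i - 1]) / prices[i - 1], 4),
            # Average of the last `window` prices, including today.
            "rolling_mean": round(mean(prices[i - window + 1 : i + 1]), 4),
        })
    return rows

# Hypothetical daily closing prices for one ticker.
closes = [100.0, 102.0, 101.0, 105.0]
features = add_features(closes)
for row in features:
    print(row)
```

Guarding against look-ahead leakage is the main design point: a feature that peeks at tomorrow's price makes a stock-trend model look accurate in testing and fail in production.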

AI Tools:

- TensorFlow / PyTorch (basics only)

- AutoML with AWS/GCP/Azure

- Vertex AI (GCP) or SageMaker (AWS)

Capstone Project:

- Build a Data Engineering Pipeline that processes real-time financial data, transforms it, stores it in a data warehouse, and triggers an ML model to predict stock trends.

Bonus: Cloud Certification Suggestions (Highly Recommended)

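| Platform | Certification | Purpose |
|---|---|---|
| AWS | AWS Certified Data Engineer – Associate (successor to the retired Data Analytics – Specialty) | Big Data & AWS Pipelines |
| Azure | Microsoft Fabric Data Engineer Associate (DP-700, successor to the retired DP-203) | Data Engineering on Azure and Microsoft Fabric |
| GCP | Google Cloud Certified Professional Data Engineer | Industry-standard GCP data engineering cert |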

Key Tools to Master

| Category | Tools & Frameworks |
|---|---|
| Programming | Python, SQL |
| Data Processing | Pandas, Spark, Kafka |
| Cloud Platforms | AWS, Azure, GCP |
| Databases | MySQL, PostgreSQL, MongoDB, Snowflake |
| Workflow Orchestration | Apache Airflow, dbt |
| DevOps for Data | Git, Docker, Kubernetes (optional) |
| AI/ML | MLflow, AutoML, TensorFlow (basics only) |
| BI/Reporting | Power BI, Tableau, Looker |

Daily & Weekly Study Plan

| Time Period | Daily Commitment | Hours per Week |
|---|---|---|
| Weekdays | 2 hours/day (practice + courses) | 10 hours |
| Weekends | 5 hours/day (projects or revision) | 10 hours |
| Total | Consistent practice for ~26 weeks | 20 hours/week ≈ 520 hours |

Pro Tips for Success

- Build 4–5 real-world data projects and publish them on GitHub.

- Maintain a strong LinkedIn profile and write blog posts explaining your projects.

- Contribute to open-source data engineering tools like Airflow or dbt.

- Join Reddit, Stack Overflow, and Discord communities for peer support.

- Subscribe to newsletters like Data Engineering Weekly or O’Reilly Radar.

Final Words

With this 6-month structured roadmap, commitment, and consistent hands-on practice, you’ll be well prepared to compete for well-paying Data Engineering roles by 2026, equipped not only with cloud and big data skills but also with AI-readiness.

💬 Want a customized resume or portfolio template for Data Engineers? Drop a comment below!

#DataEngineer #CloudComputing #AIinData #BigDataRoadmap #AWS #GCP #Azure #Airflow #Spark #SQL #DataPipeline #Revuteck #thinqnxt
